Meta’s earnings were fine. But there is a certain sense of exhaustion in the reaction to Big Tech earnings so far this quarter.

Here are my highlights from today’s call.

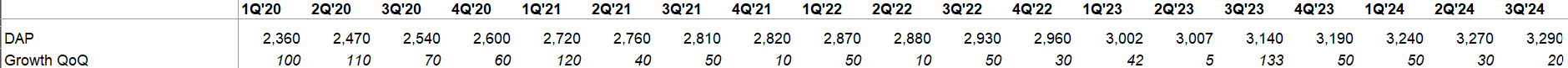

Users

Daily Active People (DAP) net adds across its Family of Apps (FOA) decelerated to 20 mn QoQ in 3Q’24.

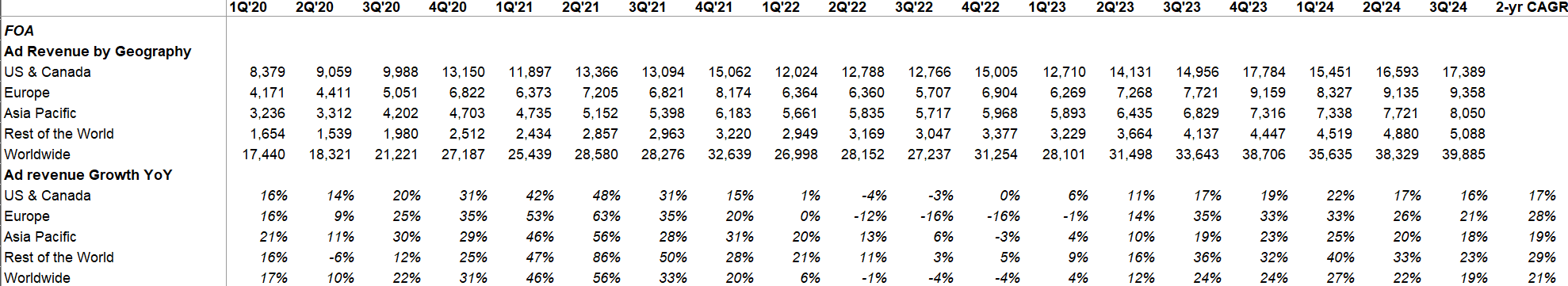

Ad revenue by Geography

Even though the easy comps ended in 1Q’24 and 3Q’24 faced a fairly difficult comp, Meta posted quite strong YoY growth rates across regions in 3Q’24.

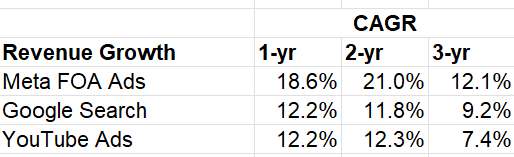

Meta’s Family of Apps (FOA) ads revenue growth is comfortably ahead of both Google Search and YouTube ads in each of the last 1-yr, 2-yr, and 3-yr timeframes. Given the growth levers Meta has today, I reckon it will grow noticeably faster than both Google Search and YouTube ads in the next couple of years. More on the growth levers later.

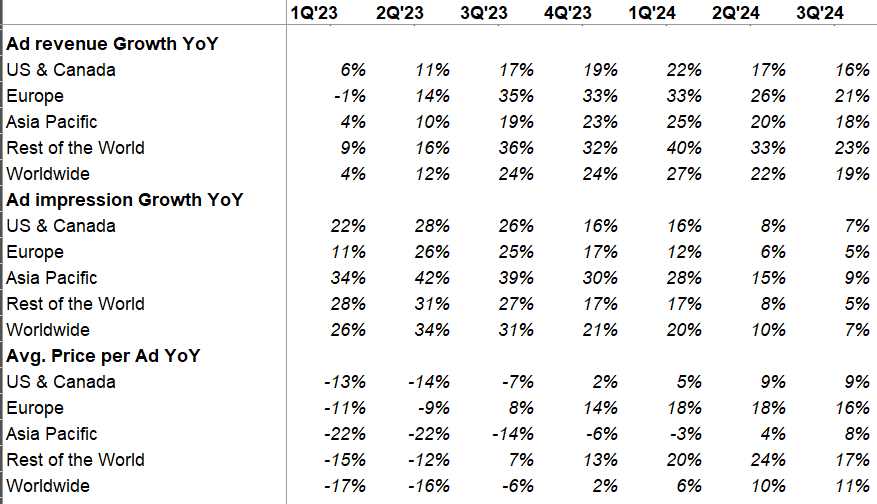

Ad Impression and Avg. Price Per Ad

Overall impressions grew by 7% and avg. price per ad grew by 11% YoY. Interestingly, Asia Pacific had the slowest growth in 3Q’24, mostly because it was lapping a period of stronger demand from China-based advertisers. I remember how much airtime these “China-based advertisers” were getting last year from the analysts, and how they may have “inflated” Meta’s ad revenue. Today, this felt like almost a trivial point.
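As a rough sanity check on how impressions and pricing combine, here is a minimal sketch. It assumes ad revenue is simply impressions times average price per ad, so the two growth rates compound rather than add. The variable names are mine, and since the reported 7% and 11% are rounded, the result is only approximate.

```python
# Rough decomposition: ad revenue = impressions x average price per ad,
# so the two growth rates compound rather than add.
impression_growth = 0.07  # +7% YoY, from the post
price_growth = 0.11       # +11% YoY, from the post

implied_ad_revenue_growth = (1 + impression_growth) * (1 + price_growth) - 1

print(f"Implied ad revenue growth: {implied_ad_revenue_growth:.1%}")
```

Under that assumption, the two figures together point to ad revenue growth in the high teens.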

Meta’s ad infrastructure is going through some consequential changes which I suspect will keep improving monetization at all the FOA properties over multiple quarters going forward. Some interesting quotes from the call:

Pricing growth was driven by increased advertiser demand, in part due to improved ad performance.

…Similar to organic content ranking, we are finding opportunities to achieve meaningful ads performance gains by adopting new approaches to modeling. For example, we recently deployed new learning and modeling techniques that enable our ad systems to consider the sequence of actions a person takes before and after seeing an ad. Previously, our ad system could only aggregate those actions together without mapping the sequence. This new approach allows our systems to better anticipate how audiences will respond to specific ads. Since we adopted the new models in the first half of this year, we've already seen a 2% to 4% increase in conversions based on testing within selected segments.

In Q3, we introduced changes to our ad ranking and optimization models to take more of the cross-publisher journey into account, which we expect to increase the Meta attributed conversions that advertisers see in their third-party analytics tools.

we care a lot about conversion growth, which…continues to grow faster than impression growth. And are we seeing healthy cost per action or cost per conversion trends. And as long as we continue to get better at driving conversions for advertisers that should have the effect of lifting CPMs over time, because we're delivering more conversions per impression served and that will result in higher value impressions.
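The mechanics in that last quote can be made concrete with a toy example. This is only a sketch: the baseline conversion rate and cost per conversion below are hypothetical numbers I made up, and the 3% uplift is just the midpoint of the 2% to 4% range quoted above, applied as if it held across all impressions.

```python
def cpm(conversions_per_impression: float, cost_per_conversion: float) -> float:
    """Revenue per 1,000 impressions at a given cost per conversion."""
    return 1000 * conversions_per_impression * cost_per_conversion

# Hypothetical baseline (not from the call): 0.2% of impressions convert at $5 each.
baseline_rate = 0.002
cost_per_conversion = 5.0

# Assumed uplift: midpoint of the 2% to 4% conversion gain cited in the quote.
improved_rate = baseline_rate * 1.03

cpm_before = cpm(baseline_rate, cost_per_conversion)
cpm_after = cpm(improved_rate, cost_per_conversion)

print(f"CPM before: ${cpm_before:.2f}, after: ${cpm_after:.2f}")
```

The point is that if cost per conversion stays flat for the advertiser, every extra conversion per impression flows straight into a higher CPM.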

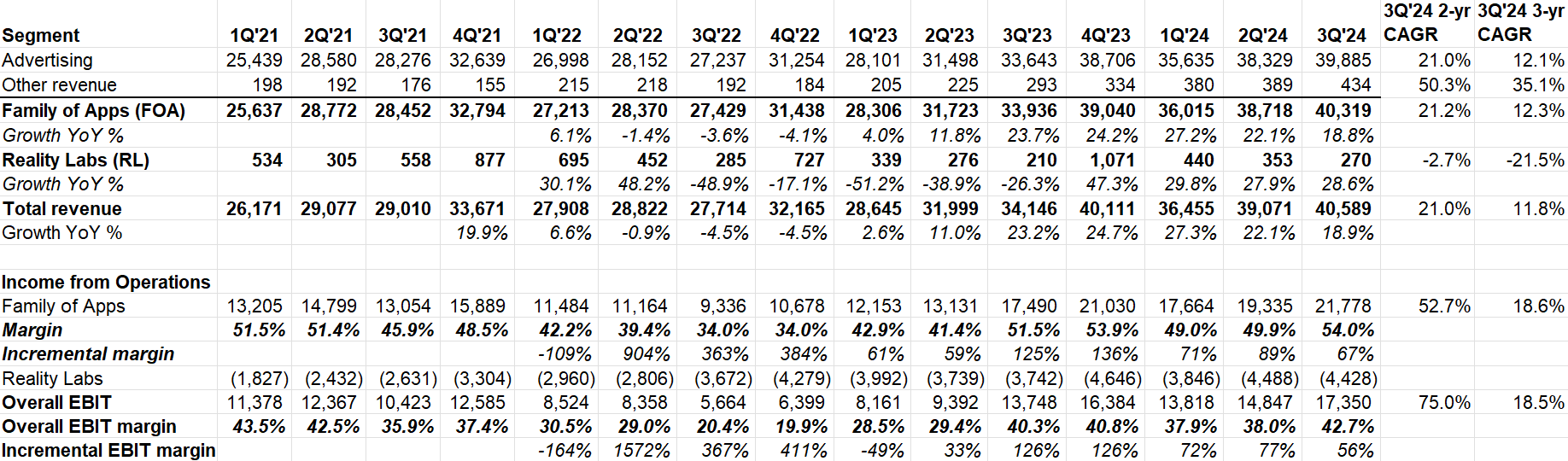

Segment Reporting

Overall 3Q’24 revenue was +18.9% YoY; on a 2-yr and 3-yr CAGR basis, Meta’s topline increased by 21.0% and 11.8% respectively.
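For anyone who wants to reproduce these growth rates, here is a minimal sketch of the CAGR math. The quarterly revenue inputs are not stated in this post; I am plugging in approximate figures from Meta's reported results as assumptions, so treat them as illustrative.

```python
def cagr(end_value: float, start_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

# Assumed quarterly revenue in $ billions (approximate, not from the post).
revenue = {"3Q'21": 29.01, "3Q'22": 27.71, "3Q'23": 34.15, "3Q'24": 40.59}

yoy = cagr(revenue["3Q'24"], revenue["3Q'23"], 1)
cagr_2yr = cagr(revenue["3Q'24"], revenue["3Q'22"], 2)
cagr_3yr = cagr(revenue["3Q'24"], revenue["3Q'21"], 3)

print(f"YoY: {yoy:.1%}, 2-yr CAGR: {cagr_2yr:.1%}, 3-yr CAGR: {cagr_3yr:.1%}")
```

The 2-yr CAGR comes out higher than the 1-yr growth rate because 3Q’22 was a depressed base, while the 3-yr figure is dragged down by that same weak stretch.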

FOA’s 3Q’24 operating margin of 54.0% was slightly below its peak operating margin of 54.4% in 4Q’20. Incremental margin at FOA remains super impressive at 67.2% in 3Q’24.

Here’s a funny stat. Meta added $12.9 Billion incremental quarterly revenue in 3Q’24 vs 3Q’22. Their incremental operating income at FOA increased by $12.4 Billion during this time. So, basically they were able to grow their revenue at effectively 100% margin. The magic of zero marginal cost runs deep into Meta’s business.
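The "effectively 100% margin" claim is just a ratio of the two numbers above. A quick sketch, using only the figures in this post (note it compares total company revenue with FOA operating income, so it is a loose approximation and not a clean segment calculation):

```python
# Figures from the post, in $ billions (3Q'24 vs 3Q'22).
incremental_revenue = 12.9               # quarterly revenue added
incremental_foa_operating_income = 12.4  # FOA operating income added

incremental_margin = incremental_foa_operating_income / incremental_revenue

print(f"Two-year incremental margin: {incremental_margin:.1%}")
```

So roughly 96 cents of every incremental revenue dollar over those two years showed up as FOA operating income.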

The less magical segment: Reality Labs (RL) continues to bleed. But an optimist would say the silver lining is that losses declined QoQ. Does this mean we are very close to peak RL losses? Meta was asked this question, but didn’t answer definitively. My guess is they may provide more clarity on this next quarter.

Let’s look at some interesting comments from the earnings call:

AI, AI, AI

Lots of good data points on how AI is helping Meta’s core products:

Meta AI now has more than 500 million monthly actives, improvements to our AI-driven feed and video recommendations have led to an 8% increase in time spent on Facebook and a 6% increase on Instagram this year alone. More than 1 million advertisers used our Gen AI tools to create more than 15 million ads in the last month. And we estimate that businesses using image generation are seeing a 7% increase in conversions and we believe that there's a lot more upside here.

Meta’s new approach to content ranking will likely act as a tangible lever of growth in terms of time spent and surfacing more relevant content for users, but it’s probably going to be a multi-year journey:

Previously, we operated separate ranking and recommendation systems for each of our products because we found that performance did not scale if we expanded the model size and compute power beyond a certain point. However, inspired by the scaling laws we were observing with our large language models, last year, we developed new ranking model architectures capable of learning more effectively from significantly larger data sets.

To start, we have been deploying these new architectures to our Facebook video ranking models, which has enabled us to deliver more relevant recommendations and unlock meaningful gains in watch time. Now we're exploring whether these new models can unlock similar improvements to recommendations on other services. After that, we will look to introduce cross-surface data to these models, so our systems can learn from what is interesting to someone on one surface of our apps and use it to improve their recommendations on another. This will take time to execute and there are other explorations that we will pursue in parallel.

However, over time, we are optimistic that this will unlock more relevant recommendations while also leading to higher engineering efficiency as we operate a smaller number of recommendation systems.

Llama

Meta again explained how their “open” approach is going to benefit them in the long run:

There's sort of the quality flavor and the efficiency flavor. There are a lot of researchers and independent developers who do work and because Llama is available, they do the work on Llama and they make improvements and then they publish it and it becomes -- it's very easy for us to then incorporate that both back into Llama and into our Meta products like Meta AI or AI Studio or Business AIs

Perhaps more importantly, is just the efficiency and cost. I mean this stuff is obviously very expensive. When someone figures out a way to run this better if that -- if they can run it 20% more effectively, then that will save us a huge amount of money. And that was sort of the experience that we had with open compute and part of why we are leaning so much into open source here in the first place, is that we found counterintuitively with open compute that by publishing and sharing the architectures and designs that we had for our compute, the industry standardized around it a bit more. We got some suggestions also that helped us save costs and that just ended up being really valuable for us. Here, one of the big costs is chips -- a lot of the infrastructure there. What we're seeing is that as Llama gets adopted more, you're seeing folks like NVIDIA and AMD optimize their chips more to run Llama specifically well, which clearly benefits us. So it benefits everyone who's using Llama, but it makes our products better, right, rather than if we were just on an island building a model that no one was kind of standardizing around the industry. So that's some of what we're seeing around Llama and why I think it's good business for us to do this in an open way.

One thing that really stood out to me was Meta mentioning that they are helping the public sector adopt Llama across the U.S. government. That assuages my concerns about potentially unfriendly regulation of the open source approach, which some of the companies pursuing closed models are lobbying for strongly:

This quarter, we released Llama 3.2, including the leading small models that run on device and open source multimodal models. We are working with enterprises to make it easier to use. And now we're also working with the public sector to adopt Llama across the U.S. government.

Llama 4 (the smaller one) is coming early next year:

The Llama 3 models have been something of an inflection point in the industry. But I'm even more excited about Llama 4, which is now well into its development. We're training the Llama 4 models on a cluster that is bigger than 100,000 H100s or bigger than anything that I've seen reported for what others are doing. I expect that the smaller Llama 4 models will be ready first, and they'll be ready -- we expect sometime early next year.

It seems pretty clear to me that open source will be the most cost-effective, customizable, trustworthy, performant, and easiest to use option that is available to developers.

Facebook, Instagram, and WhatsApp

On Facebook, we continue to see positive trends with the young adults, especially in the U.S.

In the third quarter, we continue to see daily usage grow year-over-year across Facebook and Instagram, both globally and in the U.S. On Facebook, we're seeing strong results from the global rollout of our unified video player in June.

Since introducing the new experience and prediction systems that power it, we've seen a 10% increase in time spent within the Facebook video player. This month, we've entered the next phase of Facebook's video product evolution. Starting in the U.S. and Canada, we are updating the stand-alone video tab to a full screen viewing experience, which will allow people to seamlessly watch videos in a more immersive experience. We expect to complete this global rollout in early 2025.

Within Facebook, video engagement continues to shift to short form following the unification of our video player, and we expect this to continue with the transition of the video tab to a full screen format. This is resulting in organic video impressions growing more quickly than overall video time on Facebook, which provides more opportunities to serve ads.

Across both Facebook and Instagram, we're also continuing our broader work to optimize when and where we should show ads within a person's session. This is enabling us to drive revenue and conversion growth without increasing the number of ads.

As you can see, there are a number of growth levers Meta is working on. Each of these levers will probably only add a couple of points of growth, but in aggregate they can really add up over time.

For WhatsApp, the U.S. remains one of our fastest-growing countries, and we just passed a milestone of 2 billion calls made globally every day.

The other element of revenue on WhatsApp, I would say, is paid messaging that continues to grow at a strong pace again this quarter. It remains -- in fact, the primary driver of growth in our Family of Apps other revenue line, which was up 48% in Q3, and we're seeing generally a strong increase in the volume of paid conversations driven both by growth in the number of businesses adopting paid messaging as well as in the conversational volume per business.

Threads

Threads Monthly Active Users over time:

3Q’23: 100 Million

4Q’23: 130 Million

1Q’24: 150 Million

2Q’24: 200 Million

3Q’24: 275 Million

They are seeing 1 million sign-ups per day. Engagement is growing as well. In Q3, they saw strong user growth in the U.S., Taiwan and Japan.

Threads monetization is unlikely to happen in 2025. But Threads is another “margin of safety” in Meta’s growth trajectory. Given how net MAU additions have accelerated over the last two quarters, Threads may well reach the coveted 1 Billion MAUs sometime in 2026, after which I think Meta will start monetizing it. Once this ad inventory becomes available, that’s gotta be a bit of a tailwind for Meta sometime in 2026-27.
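To put some rough bounds on when Threads could hit 1 Billion MAUs, here is a back-of-the-envelope sketch using the MAU figures listed above. Both scenarios are my own simplifying assumptions (flat net adds versus a constant QoQ growth rate), not anything Meta has guided to.

```python
import math

# Threads MAU in millions, as listed in the post.
mau = {"3Q'23": 100, "4Q'23": 130, "1Q'24": 150, "2Q'24": 200, "3Q'24": 275}

quarters = list(mau)
values = list(mau.values())

# Net adds and QoQ growth for each quarter after the first.
net_adds = [b - a for a, b in zip(values, values[1:])]
qoq_growth = [b / a - 1 for a, b in zip(values, values[1:])]

for quarter, added, growth in zip(quarters[1:], net_adds, qoq_growth):
    print(f"{quarter}: +{added} mn net adds, {growth:.1%} QoQ")

TARGET = 1000  # 1 Billion MAU, in millions
latest = values[-1]

# Scenario A (assumption): net adds stay flat at the latest quarter's level.
quarters_linear = math.ceil((TARGET - latest) / net_adds[-1])

# Scenario B (assumption): the latest QoQ growth rate keeps compounding.
quarters_compound = math.ceil(
    math.log(TARGET / latest) / math.log(1 + qoq_growth[-1])
)

print(f"Quarters to 1 Bn: {quarters_compound} (compounding) "
      f"to {quarters_linear} (flat net adds)")
```

Counting forward from 3Q’24, that range runs from late 2025 to early 2027, so a 2026 milestone sits between the two scenarios.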

AR/VR

Meta mentioned their newly launched limited edition Meta Ray-Ban glasses sold out almost immediately and are currently “trading” online for over $1,000. Good signs!

VR got very limited attention in the call. Quest 3 will probably do really well during the holiday season, but if this doesn’t lead to better engagement and retention post-Christmas, I suspect Meta may get its “efficiency” religion in VR and re-allocate some resources to move faster so that they can launch “Orion” before Apple gets there.

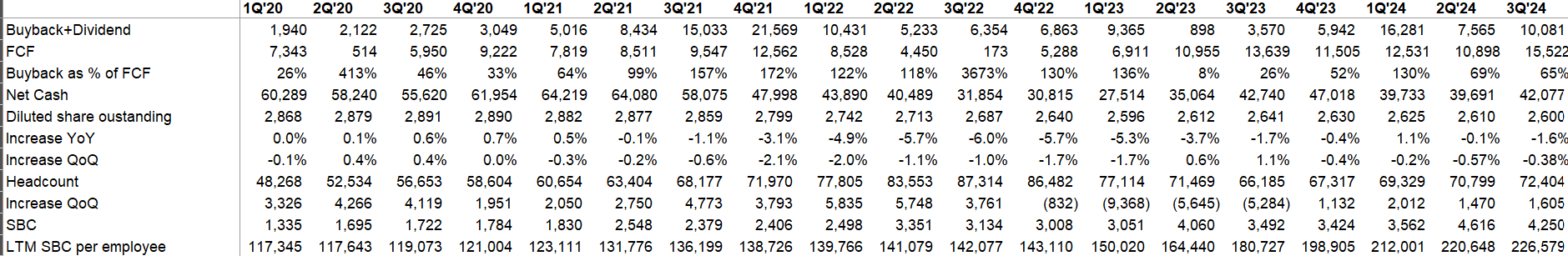

Capital Allocation

Meta generated $15.5 Billion in FCF and returned $10 Billion in cash to shareholders via dividends and buybacks. They also completed a debt offering of $10.5 Billion in Q3.

LTM SBC per employee is now at $226k. Mr. Zuckerberg is clearly quite generous with his employees!

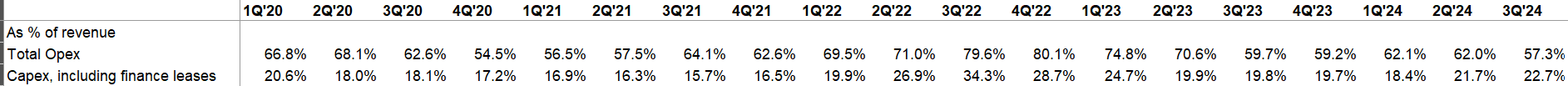

Capex and Opex

Opex guide for 2024 was narrowed to $96-98 Billion (vs $96-99 Billion previously).

Capex guide range was again tightened to $38-40 Billion (from $37-40 Bn in 2Q’24, $35-40 Bn in 1Q’24, and $30-37 Bn in 4Q’23). However, in the first 9 months, they only spent ~$24 Billion. So Meta is going to spend ~$15 Billion on capex in Q4:

We continue to expect significant capital expenditure growth in 2025. Given this, along with the back-end weighted nature of our 2024 CapEx, we expect a significant acceleration in infrastructure expense growth next year as we recognize higher growth in depreciation and operating expenses of our expanded infrastructure fleet.
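The ~$15 Billion Q4 figure is simply what is left of the full-year guide after the first nine months. A minimal sketch using only the numbers in this post (the ~$24 Billion is approximate, so the output is too):

```python
# All figures in $ billions, taken from the post.
capex_guide_low, capex_guide_high = 38.0, 40.0
capex_first_nine_months = 24.0  # approximate ("~$24 Billion")

implied_q4_low = capex_guide_low - capex_first_nine_months
implied_q4_high = capex_guide_high - capex_first_nine_months
implied_q4_mid = (implied_q4_low + implied_q4_high) / 2

print(f"Implied Q4 capex: ${implied_q4_low:.0f}-{implied_q4_high:.0f} Bn "
      f"(mid-point ${implied_q4_mid:.0f} Bn)")
```

If Q4 lands near the mid-point, that single quarter would account for well over a third of the full-year capex, which is the "back-end weighted" pattern the quote refers to.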

Consensus capex estimate for 2025 is still $47 Billion. I suspect it’s going to be closer to $50 Billion.

Outlook

4Q’24 topline guide is $45-48 Bn. The $46.5 Bn mid-point implies ~15.9% YoY growth (vs consensus estimate of $46.2 Billion).
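To compare the guide with consensus on a like-for-like basis, here is a small sketch. It backs out the prior-year quarter's revenue from the mid-point and the ~15.9% growth rate stated above, so the implied base (and everything derived from it) is an approximation, not a reported figure.

```python
# Figures from the post, in $ billions.
guide_low, guide_high = 45.0, 48.0
consensus = 46.2
midpoint_growth = 0.159  # ~15.9% YoY at the mid-point, as stated in the post

midpoint = (guide_low + guide_high) / 2

# Back out the prior-year quarter implied by the mid-point growth rate.
implied_base = midpoint / (1 + midpoint_growth)

consensus_growth = consensus / implied_base - 1
low_growth = guide_low / implied_base - 1
high_growth = guide_high / implied_base - 1

print(f"Mid-point: ${midpoint:.1f} Bn, implied 4Q'23 base: ${implied_base:.1f} Bn")
print(f"Growth at low / consensus / high: "
      f"{low_growth:.1%} / {consensus_growth:.1%} / {high_growth:.1%}")
```

On these numbers, the mid-point of the guide sits slightly above consensus.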

Closing Words

At the end of his prepared remarks, Zuckerberg said the following:

This may be the most dynamic moment that I've seen in our industry, and I am focused on making sure that we build some awesome things and make the most of the opportunities ahead.

For a change, Meta seems quite well positioned during a potentially seismic shift in the tech landscape. Meta still needs to execute well, but nothing really happens in a straight line.

Notes from the follow-up call here.

For more in-depth analysis on Meta Platforms, you can read my analysis here (February, 2024).

I will cover Amazon’s earnings tomorrow. Thank you for reading.

Disclaimer: All posts on “MBI Deep Dives” are for informational purposes only. This is NOT a recommendation to buy or sell securities discussed. Please do your own work before investing your money.